Math and Statistics for Ediscovery Lawyers Using Technology Assisted Review (TAR)

Legal professionals learn a lot of complicated concepts and principles through education and/or practice… but they usually don’t have much—or anything—to do with math or statistics.

Then along came Technology-Assisted Review (TAR), which promises to revolutionize ediscovery by using sampling techniques and advanced algorithms to predict whether documents are responsive to particular criteria. Simply put, these technologies rely heavily on math and statistics, and modern practitioners need to be more tech- and math-savvy than ever before (or be willing to engage the appropriate predictive coding experts or other resources) in order to understand this methodology and use it to its full potential.

However, to master the TAR process, legal professionals don’t need to dust off their slide rules and graphing calculators, crack open a stats book, or flock to the nearest college or university to enroll in a math class. Rather, they simply need to focus on understanding the key concepts and metrics necessary to manage the predictive coding process.

To help put you on the path to TAR technology mastery, here is a cursory overview of the metrics and processes you need to know.

Key Metrics in Effectiveness Reporting

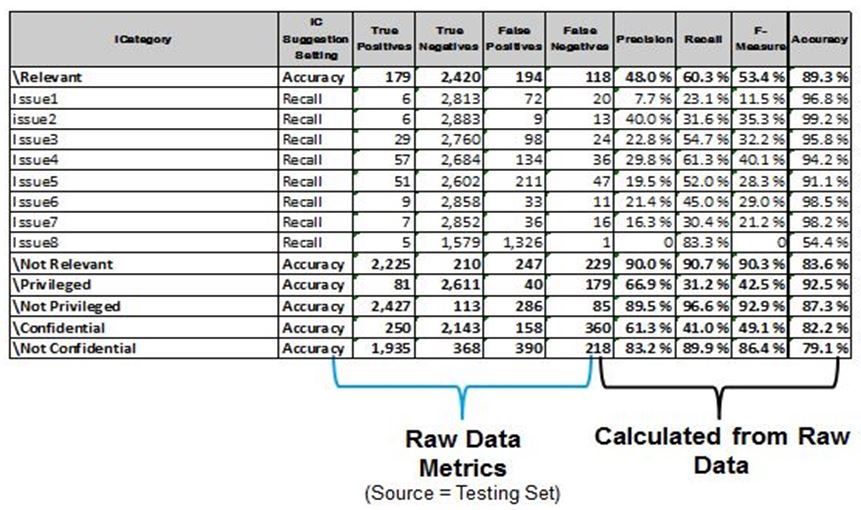

After the technology has run machine learning, it will generate a report with raw data metrics and calculations that should look something like this:

Understanding the metrics on this report is key to analyzing your technology’s performance and determining what to do next.

True and False Positives/Negatives

In a search or review exercise, the technology suggests a classification of “responsive” or “not responsive” for each document, and each suggestion is then checked against the correct coding. When a document is suggested as “responsive” and the suggestion is correct, this is a true positive; when the suggestion is incorrect (i.e., a non-responsive document is coded as responsive), it is a false positive. Likewise, when a document is suggested as “not responsive” and the suggestion is correct, this is a true negative; if the suggestion is incorrect (i.e., the document should have been coded “responsive”), it is a false negative.

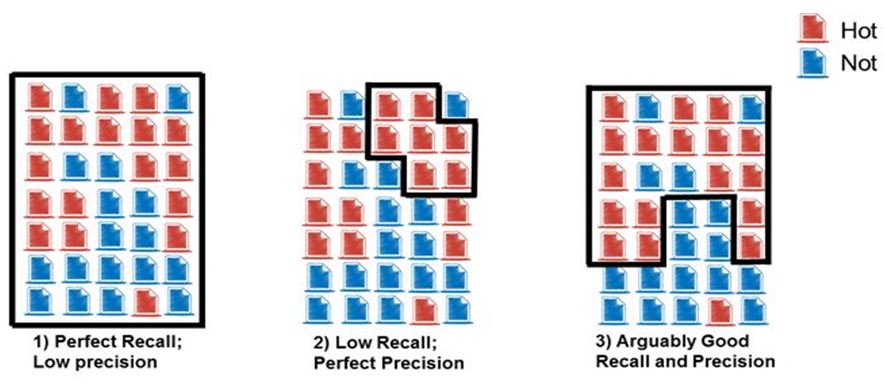
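To make the four categories concrete, here is a minimal Python sketch that tallies them from two parallel lists: the technology's suggestions and the correct coding. The function name `confusion_counts` and the six sample documents are hypothetical and chosen purely for illustration; they do not come from any particular TAR product.

```python
from collections import Counter

def confusion_counts(predicted, actual):
    """Tally TP, FP, TN, FN for parallel lists of booleans (True = responsive)."""
    counts = Counter()
    for pred, truth in zip(predicted, actual):
        if pred and truth:
            counts["TP"] += 1   # suggested responsive, and it is
        elif pred and not truth:
            counts["FP"] += 1   # suggested responsive, but it is not
        elif not pred and not truth:
            counts["TN"] += 1   # suggested not responsive, and it is not
        else:
            counts["FN"] += 1   # suggested not responsive, but it is
    return counts

# Hypothetical data: six documents, invented for this example
predicted = [True, True, False, False, True, False]
actual    = [True, False, False, True, True, False]

counts = confusion_counts(predicted, actual)
print(dict(counts))
```

In this made-up set, two suggestions are true positives, two are true negatives, one is a false positive, and one is a false negative.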

Recall, Precision, and F-measure

While these three key metrics have been discussed previously, understanding them is essential to employing predictive analytics for document review successfully, effectively, and efficiently. Generally speaking, precision is the fraction of retrieved documents that are relevant, essentially a measure of exactness. Recall is the fraction of all relevant documents that were retrieved, essentially a measure of completeness. F-measure is the harmonic mean of the system’s recall and precision.
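Expressed in terms of true and false positives and negatives, these metrics reduce to a few lines of arithmetic. The sketch below uses the standard definitions, with the balanced F-measure (often called F1); the counts are hypothetical and not drawn from a real review.

```python
def precision(tp, fp):
    """Fraction of documents suggested as responsive that truly are."""
    return tp / (tp + fp) if (tp + fp) else 0.0

def recall(tp, fn):
    """Fraction of truly responsive documents that were suggested as responsive."""
    return tp / (tp + fn) if (tp + fn) else 0.0

def f_measure(p, r):
    """Harmonic mean of precision and recall (balanced F-measure, or F1)."""
    return 2 * p * r / (p + r) if (p + r) else 0.0

# Hypothetical counts, invented for this example
tp, fp, fn = 80, 20, 40

p = precision(tp, fp)   # 80 / 100
r = recall(tp, fn)      # 80 / 120
f = f_measure(p, r)

print(f"precision={p:.3f} recall={r:.3f} f_measure={f:.3f}")
```

Because the harmonic mean is pulled toward the lower of its two inputs, a system cannot earn a high F-measure by excelling at only one of precision or recall.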

Accuracy

Accuracy measures how well the classifier performed overall by identifying the fraction of correctly coded documents, expressed as (True Positives + True Negatives) / (All Documents). While accuracy can be helpful, it should not drive decisions. Accuracy can be skewed upward if the database is dominated by either true positives or true negatives; for example, when nearly all documents are non-responsive, a system that finds few of the responsive documents can still report high accuracy.
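A short worked example shows how this skew can arise. The sketch assumes a hypothetical collection of 10,000 documents, of which only 100 are responsive, and a deliberately useless classifier that suggests “not responsive” for every document; all of the numbers are invented for illustration.

```python
def accuracy(tp, fp, tn, fn):
    """(True Positives + True Negatives) / (All Documents)."""
    total = tp + fp + tn + fn
    return (tp + tn) / total if total else 0.0

# Hypothetical collection: 10,000 documents, 100 of them responsive.
# The classifier marks everything "not responsive".
tp, fp = 0, 0
tn, fn = 9_900, 100

acc = accuracy(tp, fp, tn, fn)
rec = tp / (tp + fn)  # recall: share of responsive documents actually found

print(f"accuracy={acc:.2%} recall={rec:.2%}")
```

Accuracy comes out at 99 percent even though the classifier found none of the responsive documents, which is why recall and precision are usually the better guides.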

Analyzing Technology-Assisted Review Metrics with Sampling

Sampling is one of the most versatile tools in your technology-assisted review arsenal. The sampling process examines a fraction of the document population to determine characteristics of the whole, further validating what you do or don’t have and strengthening the defensibility of your review processes and procedures. Notably, it is often used to perform quality control (QC), which can take place iteratively, or at the back end of a review to assess it.

Quality control rests on a simple principle: TAR predictions are not always right. Through sampling, various sets of the data are drawn, manually reviewed by a quality control team, and evaluated. Based on these results, the team can decide whether additional training is needed, or it might conclude that the technology is categorizing documents so effectively that it is comfortable relying wholly on machine predictions and stopping manual review.
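As a rough illustration of how a QC sample can be turned into an estimate for the whole population, the sketch below draws a simple random sample and computes a 95 percent confidence interval using the normal approximation. This is one common textbook approach, not necessarily the method a given TAR platform uses, and the function names, sample size, and error count are all hypothetical.

```python
import math
import random

def sample_ids(doc_ids, sample_size, seed=0):
    """Draw a simple random sample without replacement (seeded for repeatability)."""
    rng = random.Random(seed)
    return rng.sample(doc_ids, sample_size)

def proportion_interval(hits, n, z=1.96):
    """Point estimate and normal-approximation interval for a proportion.

    z=1.96 corresponds to roughly 95 percent confidence.
    """
    p = hits / n
    margin = z * math.sqrt(p * (1 - p) / n)
    return p, max(0.0, p - margin), min(1.0, p + margin)

# Hypothetical population of 10,000 document IDs
doc_ids = list(range(10_000))
qc_sample = sample_ids(doc_ids, 400)

# Assume the QC team reviews the 400 documents and finds that
# 20 of the machine predictions were wrong (an invented figure).
wrong_predictions = 20
rate, low, high = proportion_interval(wrong_predictions, len(qc_sample))

print(f"estimated error rate {rate:.1%}, 95% interval {low:.1%} to {high:.1%}")
```

The normal approximation is less reliable when the sample is small or the observed rate is close to 0 or 1, so a statistician may prefer an exact or adjusted interval in those cases.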

The Next Step: Mastery

While general knowledge of these predictive coding metrics is a great start, it is merely a drop in the bucket. To learn more about mastering the math behind the TAR technology, don’t miss the May 10th webinar hosted by Kroll Ontrack and ACEDS, MATH & STATS 101: What Lawyers Need to Know to (Properly) Leverage TAR.